1. Introduction

1.1. Overview

The objective of this Reference Architecture (RA) is to develop a usable Kubernetes-based platform for the Telco industry. The RA is based on the standard Kubernetes platform wherever possible. This Reference Architecture for Kubernetes describes the high-level system components and their interactions, taking the goals and requirements from the Cloud Infrastructure Reference Model (RM) [1] and mapping them to Kubernetes (and related) components. This document needs to be sufficiently detailed and robust to guide the production deployment of Kubernetes within an operator, whilst being flexible enough to evolve with, and remain aligned with, the wider Kubernetes ecosystem outside of Telco.

To set this in context, it makes sense to start with the high-level definition and understanding of Kubernetes. Kubernetes [2] is a “portable, extensible, open-source platform for managing containerised workloads and services, that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available” [3]. Kubernetes is developed as an open-source project in the kubernetes repository on GitHub [4].
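The declarative configuration mentioned above can be illustrated with a toy reconcile loop: the user declares a desired state, and a controller repeatedly compares it with the observed state and acts only on the difference. The Python sketch below is a simplified, hypothetical model written for illustration; `ToyCluster`, `reconcile`, and the application names are invented here and are not part of the Kubernetes API or its controller code.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical stand-in for a cluster: tracks running replicas per app."""
    running: dict[str, int] = field(default_factory=dict)

    def start(self, app: str) -> None:
        self.running[app] = self.running.get(app, 0) + 1

    def stop(self, app: str) -> None:
        self.running[app] -= 1
        if self.running[app] == 0:
            del self.running[app]


def reconcile(desired: dict[str, int], cluster: ToyCluster) -> list[str]:
    """Drive the observed state towards the declared state; return actions taken."""
    actions: list[str] = []
    # Scale each declared app up or down to its desired replica count.
    for app, want in desired.items():
        have = cluster.running.get(app, 0)
        for _ in range(want - have):  # empty range when already at or above target
            cluster.start(app)
            actions.append(f"start {app}")
        for _ in range(have - want):  # empty range when at or below target
            cluster.stop(app)
            actions.append(f"stop {app}")
    # Remove anything still running that is no longer declared.
    for app in list(cluster.running):
        if app not in desired:
            for _ in range(cluster.running[app]):
                cluster.stop(app)
                actions.append(f"stop {app}")
    return actions


cluster = ToyCluster()
print(reconcile({"upf": 2}, cluster))            # ['start upf', 'start upf']
print(reconcile({"upf": 1, "smf": 1}, cluster))  # ['stop upf', 'start smf']
print(reconcile({"upf": 1, "smf": 1}, cluster))  # [] (already converged)
```

Re-applying the same desired state produces no further actions; this idempotence is what makes declarative configuration safe to apply repeatedly.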

To assist with the goal of creating a reference architecture that supports Telco workloads while leveraging the work already completed in the Kubernetes community, RA2 takes an “RA2 Razor” approach to building the foundation. This can be summarised as: “if something is useful for non-Telco workloads, we will not include it only for Telco workloads”. For example, the Reference Architecture starts from a vanilla Kubernetes feature set (say, v1.16); only when there is clear evidence that a functional requirement (say, multi-NIC support) cannot be met by that system does the RA add the least invasive, Kubernetes-community aligned extension (say, Multus) to fill the gap. If there are still gaps that cannot be filled by standard Kubernetes community technologies or extensions, the RA concisely documents the requirement in the Introduction to Gaps, Innovation, and Development chapter of this document and approaches the relevant project maintainers with a request to add this functionality to the feature set.
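The decision order of the RA2 Razor can be summarised as a small decision procedure. The Python sketch below is illustrative only: `Outcome`, `Requirement`, and `ra2_razor` are hypothetical names, and whether vanilla Kubernetes meets a requirement is assumed to be supplied as an evidence-based input rather than computed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    USE_VANILLA = "use vanilla Kubernetes"
    ADD_EXTENSION = "add least invasive community-aligned extension"
    DOCUMENT_GAP = "document gap and approach project maintainers"


@dataclass
class Requirement:
    name: str
    # Evidence-based assessment, assumed to be supplied by the RA authors.
    met_by_vanilla: bool
    # Community-aligned extension that fills the gap, if one exists (e.g. "Multus").
    community_extension: Optional[str] = None


def ra2_razor(req: Requirement) -> tuple[Outcome, Optional[str]]:
    """Apply the razor in order: vanilla first, then an extension, then a gap."""
    if req.met_by_vanilla:
        return Outcome.USE_VANILLA, None
    if req.community_extension is not None:
        return Outcome.ADD_EXTENSION, req.community_extension
    return Outcome.DOCUMENT_GAP, None


outcome, extension = ra2_razor(Requirement("multi-NIC support", False, "Multus"))
print(outcome.name, extension)  # ADD_EXTENSION Multus
```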

The Kubernetes Reference Architecture will be used to determine a Kubernetes Reference Implementation. The Reference Implementation will then be used to test and validate the supportability and compatibility of Kubernetes-based Network Function workloads, and the lifecycle management of Kubernetes clusters, of interest to the Anuket community. The intention is to reuse and extend existing test frameworks as far as possible for the verification and conformance testing of Kubernetes-based workloads and of Kubernetes cluster lifecycle management.

1.1.1. Required component versions

| Component | Required version(s) |
|---|---|
| Kubernetes | 1.29 |
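A deployment pipeline might check a cluster against this table before proceeding. The sketch below assumes the version string is the server `gitVersion` value reported by `kubectl version -o json` (for example `v1.29.3`), and it assumes that "1.29" admits any patch release of the 1.29 line; both are interpretations for illustration, and `minor_version` and `meets_required_version` are hypothetical helper names.

```python
import re

# Required Kubernetes release line(s), taken from the table above.
REQUIRED_MINOR_VERSIONS = frozenset({"1.29"})

# Matches "1.29", "v1.29.3", and vendor-suffixed strings such as "v1.29.3+k3s1".
_VERSION_RE = re.compile(r"^v?(\d+)\.(\d+)(?:\.(\d+))?")


def minor_version(git_version: str) -> str:
    """Reduce a version string such as 'v1.29.3' to its 'major.minor' form."""
    match = _VERSION_RE.match(git_version.strip())
    if match is None:
        raise ValueError(f"unrecognised Kubernetes version: {git_version!r}")
    return f"{match.group(1)}.{match.group(2)}"


def meets_required_version(git_version: str,
                           required: frozenset[str] = REQUIRED_MINOR_VERSIONS) -> bool:
    """True if the version belongs to one of the required release lines."""
    return minor_version(git_version) in required


print(meets_required_version("v1.29.3"))  # True
print(meets_required_version("v1.28.9"))  # False
```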

1.1.2. Principles

1.1.2.1. Architectural principles

This Reference Architecture conforms to the Anuket principles:

- Open-source preference: for building Cloud Infrastructure solutions, components, and tools using open-source technology.
- Open APIs: to enable interoperability and component substitution, and to minimise integration efforts.
- Separation of concerns: to promote lifecycle independence of different architectural layers and modules (e.g., disaggregation of software from hardware).
- Automated lifecycle management: to minimise the end-to-end lifecycle costs, maintenance downtime (target zero downtime), and errors resulting from manual processes.
- Automated scalability: of workloads, to minimise costs and operational impacts.
- Automated closed loop assurance: for fault resolution, simplification, and cost reduction of cloud operations.
- Cloud nativeness: to optimise the utilisation of resources and enable operational efficiencies.
- Security compliance: to ensure the architecture follows industry security best practices and is compliant at all levels with relevant security regulations.
- Resilience and Availability: to withstand single points of failure.

1.1.2.2. Cloud Native Principles

For the purposes of this document, the definition of Cloud Native by the CNCF Technical Oversight Committee (TOC) applies:

CNCF Cloud Native Definition v1.0 Approved by TOC: 2018-06-11

“Cloud native technologies empower organizations to build and run scalable applications in modern, dynamic environments such as public, private, and hybrid clouds. Containers, service meshes, microservices, immutable infrastructure, and declarative APIs exemplify this approach.

These techniques enable loosely coupled systems that are resilient, manageable, and observable. Combined with robust automation, they allow engineers to make high-impact changes frequently and predictably with minimal toil.

The Cloud Native Computing Foundation seeks to drive adoption of this paradigm by fostering and sustaining an ecosystem of open source, vendor-neutral projects. We democratize state-of-the-art patterns to make these innovations accessible for everyone.”

The CNCF TUG (Telecom User Group), formed in June 2019, published a set of Cloud Native Principles suited to the requirements of the Telecom community [5]. These share many similarities with the CNCF principles, which can be summarised by the following key terms:

- scalable
- dynamic environments
- service meshes
- microservices
- immutable infrastructure
- declarative APIs
- loosely coupled
- resilient
- manageable
- observable
- robust automation
- high-impact changes frequently and predictably

1.1.2.3. Exceptions

Anuket specifications define certain policies and general principles and strive to coalesce the industry towards conformant Cloud Infrastructure technologies and configurations. With the currently available technology options, incompatibilities, performance, and operator constraints (including costs), these policies and principles may not always be achievable and, thus, require an exception process. These policies describe how to handle non-conforming technologies. In general, non-conformance with policies is handled through a set of exceptions.

The following sub-sections list the exceptions to the principles of Anuket specifications and shall be updated whenever technology choices, versions and requirements change. Each exception has an associated period of validity, which shall include time for transitioning.

1.1.2.3.1. Technology Exceptions

The list of Technology Exceptions will be updated or removed when alternative technologies, aligned with the principles of Anuket specifications, develop and mature.

| Ref | Name | Description | Valid Until | Rationale | Implication |
|---|---|---|---|---|---|
| ra2.exc.tec.001 | SR-IOV | This exception allows workloads to use SR-IOV over PCI-PassThrough technology. | TBD | Emulation of virtual devices for each virtual machine creates an I/O bottleneck, resulting in poor performance, and limits the number of virtual machines a physical server can support. SR-IOV implements virtual devices in hardware and, by avoiding the use of a software switch, near-maximal performance can be achieved. For containerisation, the downsides of creating dependencies on hardware are reduced, as Kubernetes nodes are either physical or, if virtual, have no need to “live migrate” as a VNF VM might. | |
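Because each exception carries a period of validity, tooling that tracks exceptions needs to decide whether a given exception still applies on a given date. The minimal Python sketch below models the table row above; `TechnologyException` and `is_valid_on` are hypothetical names, treating a "TBD" end date as open-ended is an assumption made for illustration, and the transition time is assumed to be already included in `valid_until`.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class TechnologyException:
    ref: str
    name: str
    # None models a "TBD" end date, i.e. no expiry has been fixed yet.
    valid_until: Optional[date] = None

    def is_valid_on(self, day: date) -> bool:
        """Assumption: an exception whose end date is TBD is treated as still valid."""
        return self.valid_until is None or day <= self.valid_until


SRIOV = TechnologyException("ra2.exc.tec.001", "SR-IOV")  # Valid Until: TBD

print(SRIOV.is_valid_on(date.today()))  # True while the end date remains TBD
```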

1.1.3. Approach

The approach taken in this Reference Architecture is to start with a basic Kubernetes architecture, based on the community distribution, and then add detail and additional features/extensions as required to meet the requirements of the Reference Model and the functional and non-functional requirements of common cloud native network functions.

This document starts with a description of interface and capability requirements (the “what”) before providing guidance, through specifications, on “how” those elements are deployed. The details of how the elements are used together are documented in the Reference Implementation.

1.2. Scope

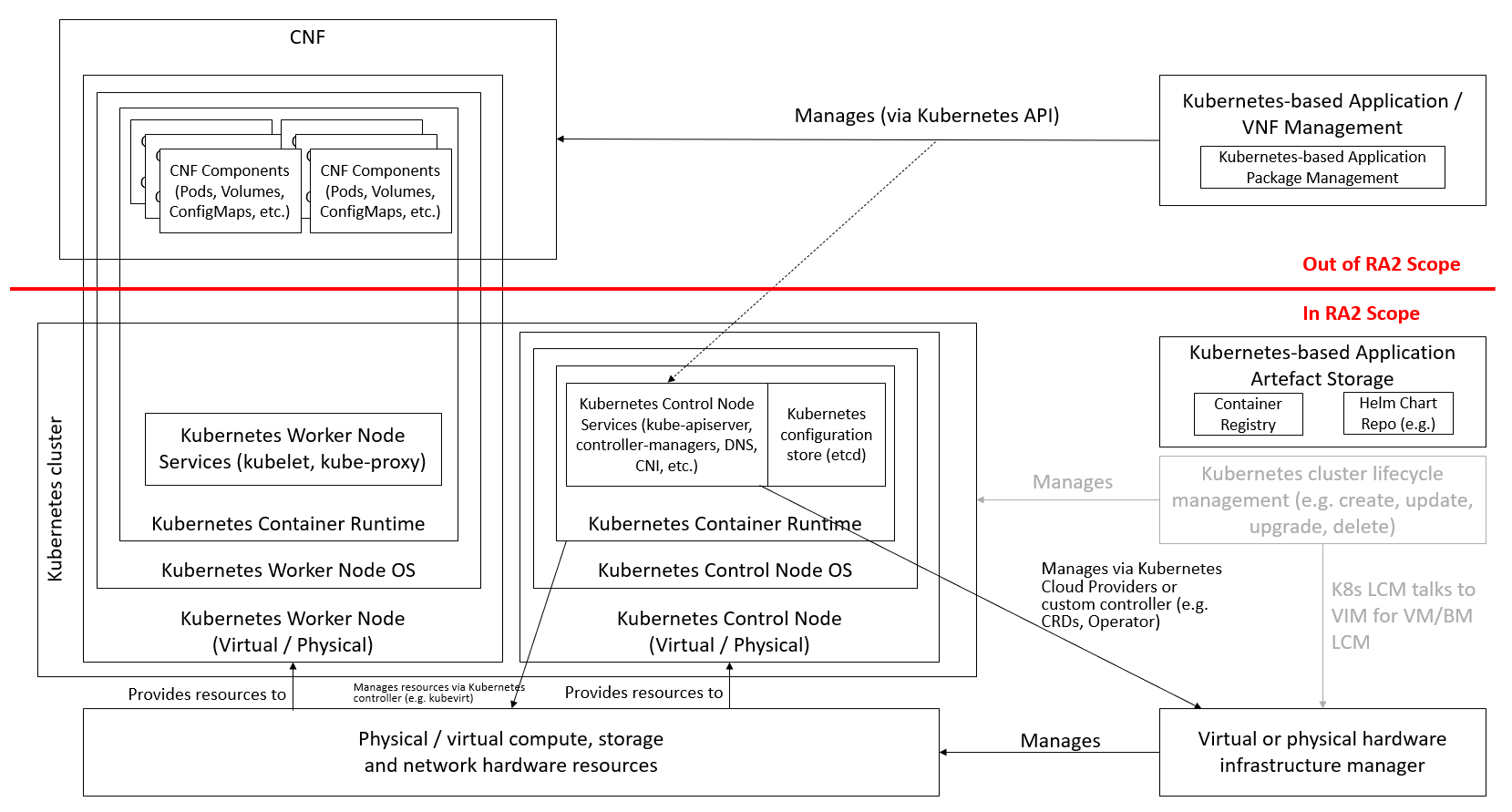

The scope of this particular Reference Architecture can be described as follows (the capabilities themselves will be listed and described in subsequent chapters):

Kubernetes platform capabilities required to conform to the Reference Model requirements

Support for CNFs that consist wholly of containers

Support for CNFs that consist partly of containers and partly of VMs, both of which will be orchestrated by Kubernetes

Kubernetes Cluster lifecycle management: including Cluster creation/upgrade/scaling/deletion, and node customisation due to workload requirements.

The following items are considered out of scope:

Kubernetes-based Application / CNF Management: this is an application layer capability that is out of scope of Anuket.

Figure 1.1 Kubernetes Reference Architecture scope¶

1.3. Definitions

| Term | Description |
|---|---|
| Abstraction | Process of removing concrete, fine-grained or lower-level details or attributes or common properties in the study of systems to focus attention on topics of greater importance or general concepts. It can be the result of decoupling. |
| Anuket | An LFN open-source project developing open reference infrastructure models, architectures, tools, and programs. |
| CaaS (Containers as a Service) | A platform suitable to host and run containerised workloads, such as Kubernetes. Instances of CaaS Platforms are known as CaaS Clusters. |
| CaaS Manager | A management plane function that manages the lifecycle (instantiation, scaling, healing, etc.) of one or more CaaS instances, including communication with the VIM for master/node lifecycle management. |
| Cloud Infrastructure | A generic term covering NFVI, IaaS and CaaS capabilities, essentially the infrastructure on which a Workload can be executed. NFVI, IaaS and CaaS layers can be built on top of each other. In the case of CaaS, some cloud infrastructure features (e.g., HW management or multitenancy) are implemented by using an underlying IaaS layer. |
| Cloud Infrastructure Hardware Profile | Defines the behaviour, capabilities, configuration, and metrics provided by the Cloud Infrastructure hardware layer resources available for the workloads. |
| Cloud Infrastructure Profile | The combination of the Cloud Infrastructure Software Profile and the Cloud Infrastructure Hardware Profile that defines the capabilities and configuration of the Cloud Infrastructure resources available for the workloads. |
| Cloud Infrastructure Software Profile | Defines the behaviour, capabilities and metrics provided by a Cloud Infrastructure Software Layer on resources available for the workloads. |
| Cloud Native Network Function (CNF) | A cloud native network function (CNF) is a cloud native application that implements network functionality. A CNF consists of one or more microservices. All layers of a CNF are developed using Cloud Native Principles including immutable infrastructure, declarative APIs, and a “repeatable deployment process”. This definition is derived from the Cloud Native Thinking for Telecommunications Whitepaper, which also includes further detail and examples. |
| Compute Node | An abstract definition of a server. A compute node can refer to a set of hardware and software that support the VMs or Containers running on it. |
| Container | A lightweight and portable executable image that contains software and all its dependencies. OCI defines Container as “An environment for executing processes with configurable isolation and resource limitations. For example, namespaces, resource limits, and mounts are all part of the container environment.” A Container provides operating-system-level virtualisation by abstracting the “user space”. One big difference between Containers and VMs is that, unlike VMs, where each VM is self-contained with all the operating system components within the VM package, containers “share” the host system’s kernel with other containers. |
| Container Engine | Software components used to create, destroy, and manage containers on top of an operating system. |
| Container Image | Stored instance of a container that holds a set of software needed to run an application. |
| Container Runtime | The software that is responsible for running containers. It reads the configuration files for a Container from a directory structure, uses that information to create a container, launches a process inside the container, and performs other lifecycle actions. |
| Core (physical) | A processing unit that can independently execute CPU instructions and is integrated with other cores on a multiprocessor (chip, integrated circuit die). Note that the multiprocessor chip is also referred to as a CPU, which is placed in a socket on a computer motherboard. |
| CPU Type | A classification of CPUs by features needed for the execution of computer programs; for example, instruction sets, cache size, number of cores. |
| Decoupling, Loose Coupling | A loosely coupled system is one in which each of its components has, or makes use of, little or no knowledge of the implementation details of other separate components. Loose coupling is the opposite of tight coupling. |
| Encapsulation | Restriction of direct access to some of an object’s components. |
| External Network | External networks provide network connectivity for a cloud infrastructure tenant to resources outside of the tenant space. |
| Fluentd | An open-source data collector for a unified logging layer, which allows data collection and consumption for better use and understanding of data. Fluentd is a CNCF graduated project. |
| Functest | An open-source project that is part of the Anuket LFN project. It addresses functional testing with a collection of state-of-the-art virtual infrastructure test suites, including automatic VNF testing. |
| Hardware resources | Compute, storage and network hardware resources on which the cloud infrastructure platform software, virtual machines and containers run. |
| Host Profile | Another term for a Cloud Infrastructure Hardware Profile. |
| Huge pages | Physical memory is partitioned and accessed using the basic page unit (4 KB by default in Linux). Huge pages, typically 2 MB and 1 GB in size, allow large amounts of memory to be utilised with reduced overhead. In an NFV environment, huge pages are critical to support large memory pool allocation for data packet buffers. Because each huge page maps more memory, fewer Translation Lookaside Buffer (TLB) entries are needed to cover a given memory region, which reduces the number of virtual-to-physical address translations that must be performed. Without huge pages enabled, high TLB miss rates would occur, degrading performance. |
| Hypervisor | Software that abstracts and isolates workloads with their own operating systems from the underlying physical resources. Also known as a virtual machine monitor (VMM). |
| Instance | A virtual compute resource, in a known state such as running or suspended, that can be used like a physical server. The term can refer to a VM Instance or a Container Instance. |
| Kibana | An open-source data visualisation system. |
| Kubernetes | An open-source system for automating deployment, scaling, and management of containerised applications. |
| Kubernetes Cluster | A set of machines, called nodes (either workers or control plane), that run containerised applications managed by Kubernetes. |
| Kubernetes Control Plane | The container orchestration layer that exposes the API and interfaces to define, deploy, and manage the lifecycle of containers. |
| Kubernetes Node | A node is a worker machine in Kubernetes. A worker node may be a VM or physical host, depending on the cluster. It has local daemons or services necessary to run Pods and is managed by the control plane. |
| Kubernetes Service | An abstract way to expose an application running on a set of Pods as a Kubernetes network service. |
| Monitoring (Capability) | Monitoring capabilities are used for the passive observation of workload-specific traffic traversing the Cloud Infrastructure. Note that, as with all capabilities, Monitoring may be unavailable or intentionally disabled for security reasons in a given cloud infrastructure instance. |
| Multi-tenancy | Feature where physical, virtual or service resources are allocated in such a way that multiple tenants and their computations and data are isolated from and inaccessible by each other. |
| Network Function (NF) | Functional block or application that has well-defined external interfaces and well-defined functional behaviour. Within NFV, a Network Function is implemented in the form of a Virtualised NF (VNF) or a Cloud Native NF (CNF). |
| NFV Orchestrator (NFVO) | Manages the VNF lifecycle and Cloud Infrastructure resources (supported by the VIM) to ensure an optimised allocation of the necessary resources and connectivity. |
| Network Function Virtualisation (NFV) | The concept of separating network functions from the hardware they run on by using a virtual hardware abstraction layer. |
| Network Function Virtualisation Infrastructure (NFVI) | The totality of all hardware and software components used to build the environment in which a set of virtual applications (VAs) are deployed; also referred to as cloud infrastructure. The NFVI can span many locations, e.g., places where data centres or edge nodes are operated. The network providing connectivity between these locations is regarded to be part of the cloud infrastructure. NFVI and VNF are the top-level conceptual entities in the scope of Network Function Virtualisation. All other components are sub-entities of these two main entities. |
| Network Service (NS) | Composition of Network Function(s) and/or Network Service(s), defined by its functional and behavioural specification, including the service lifecycle. |
| Open Network Automation Platform (ONAP) | An LFN project developing a comprehensive platform for orchestration, management, and automation of network and edge computing services for network operators, cloud providers, and enterprises. |
| ONAP OpenLab | ONAP community lab. |
| Open Platform for NFV (OPNFV) | A collaborative project under the Linux Foundation. OPNFV is now part of the LFN Anuket project. It aims to implement, test, and deploy tools for conformance and performance of NFV infrastructure. |
| OPNFV Verification Program (OVP) | An open-source, community-led compliance and verification program aiming to demonstrate the readiness and availability of commercial NFV products and services using OPNFV and ONAP components. |
| Platform | A cloud capabilities type in which the cloud service user can deploy, manage and run customer-created or customer-acquired applications using one or more programming languages and one or more execution environments supported by the cloud service provider. Adapted from ITU-T Y.3500. This includes the physical infrastructure, Operating Systems, virtualisation/containerisation software and other orchestration, security, monitoring/logging and life-cycle management software. |
| Pod | The smallest and simplest Kubernetes object. A Pod represents a set of running containers on a cluster. A Pod is typically set up to run a single primary container. It can also run optional sidecar containers that add supplementary features like logging. |
| Prometheus | An open-source monitoring and alerting system. |
| Quota | An imposed upper limit on specific types of resources, usually used to prevent excessive resource consumption by a given consumer (tenant, VM, container). |
| Resource pool | A logical grouping of cloud infrastructure hardware and software resources. A resource pool can be based on a certain resource type (for example, compute, storage and network) or a combination of resource types. A Cloud Infrastructure resource can be part of none, one or more resource pools. |
| Simultaneous Multithreading (SMT) | Simultaneous multithreading (SMT) is a technique for improving the overall efficiency of superscalar CPUs with hardware multithreading. SMT permits multiple independent threads of execution on a single core to better utilise the resources provided by modern processor architectures. |
| Tenant | Cloud service users sharing access to a set of physical and virtual resources (ITU-T Y.3500). Tenants represent an independently manageable logical pool of compute, storage and network resources abstracted from physical hardware. |
| Tenant Instance | An Instance owned by or dedicated for use by a single Tenant. |
| Tenant (Internal) Networks | Virtual networks that are internal to Tenant Instances. |
| User | Natural person, or entity acting on their behalf, associated with a cloud service customer that uses cloud services. Examples of such entities include devices and applications. |
| Virtual CPU (vCPU) | Represents a portion of the host’s computing resources allocated to a virtualised resource, for example, to a virtual machine or a container. One or more vCPUs can be assigned to a virtualised resource. |
| Virtualised Infrastructure Manager (VIM) | Responsible for controlling and managing the Network Function Virtualisation Infrastructure (NFVI) compute, storage and network resources. |
| Virtual Machine (VM) | Virtualised computation environment that behaves like a physical computer/server. A VM consists of all of the components (processor (CPU), memory, storage, interfaces/ports, etc.) of a physical computer/server. It is created using sizing information or Compute Flavour. |
| Virtualised Network Function (VNF) | A software implementation of a Network Function, capable of running on the Cloud Infrastructure. VNFs are built from one or more VNF Components (VNFC) and, in most cases, the VNFC is hosted on a single VM or Container. |
| Workload | An application (for example VNF, or CNF) that performs certain task(s) for the users. In the Cloud Infrastructure, these applications run on top of compute resources such as VMs or Containers. |
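To make the overhead argument in the Huge pages entry concrete, the arithmetic below counts how many page mappings are needed to back a 1 GiB packet-buffer pool at each page size, and how much memory a TLB with a fixed number of entries can cover. This is a back-of-the-envelope sketch: binary units are assumed for the page sizes, the 1 GiB pool and the 64-entry TLB are hypothetical figures, and real processors have separate, differently sized TLBs for each page size.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

# Page sizes named in the Huge pages definition (binary units assumed).
PAGE_SIZES = {"4 KiB": 4 * KIB, "2 MiB": 2 * MIB, "1 GiB": 1 * GIB}


def pages_needed(buffer_bytes: int, page_bytes: int) -> int:
    """Pages (and so page-table mappings) needed to back a buffer; rounds up."""
    return -(-buffer_bytes // page_bytes)


def tlb_reach(tlb_entries: int, page_bytes: int) -> int:
    """Bytes of memory covered by a TLB holding tlb_entries mappings of one page size."""
    return tlb_entries * page_bytes


if __name__ == "__main__":
    buffer = 1 * GIB  # hypothetical packet-buffer pool size
    for label, size in PAGE_SIZES.items():
        print(f"{label:>5} pages: {pages_needed(buffer, size):>7} mappings, "
              f"64-entry TLB reach = {tlb_reach(64, size) / MIB:g} MiB")
```

For the 1 GiB pool this gives 262,144 mappings with 4 KiB pages, 512 with 2 MiB pages, and a single mapping with 1 GiB pages, which is why huge pages sharply reduce TLB misses for large buffers.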

1.4. Abbreviations

| Term | Description |
|---|---|
| API | Application Programming Interface |
| BGP VPN | Border Gateway Protocol Virtual Private Network |
| CaaS | Containers as a Service |
| CI/CD | Continuous Integration/Continuous Deployment |
| CNF | Cloud Native Network Function |
| CNTT | Cloud iNfrastructure Telco Task Force |
| CPU | Central Processing Unit |
| DNS | Domain Name System |
| DPDK | Data Plane Development Kit |
| DHCP | Dynamic Host Configuration Protocol |
| ECMP | Equal Cost Multi-Path routing |
| ETSI | European Telecommunications Standards Institute |
| FPGA | Field Programmable Gate Array |
| MB/GB/TB | MegaByte/GigaByte/TeraByte |
| GPU | Graphics Processing Unit |
| GRE | Generic Routing Encapsulation |
| GSM | Global System for Mobile Communications (originally Groupe Spécial Mobile) |
| GSMA | GSM Association |
| GSLB | Global Service Load Balancer |
| GUI | Graphical User Interface |
| HA | High Availability |
| HDD | Hard Disk Drive |
| HTTP | Hypertext Transfer Protocol |
| HW | Hardware |
| IaaC (also IaC) | Infrastructure as Code |
| IaaS | Infrastructure as a Service |
| ICMP | Internet Control Message Protocol |
| IMS | IP Multimedia Subsystem |
| IO | Input/Output |
| IOPS | Input/Output Operations per Second |
| IPMI | Intelligent Platform Management Interface |
| KVM | Kernel-based Virtual Machine |
| LCM | Life Cycle Management |
| LDAP | Lightweight Directory Access Protocol |
| LFN | Linux Foundation Networking |
| LMA | Logging, Monitoring and Analytics |
| LVM | Logical Volume Manager |
| MANO | Management And Orchestration |
| MLAG | Multi-chassis Link Aggregation Group |
| NAT | Network Address Translation |
| NFS | Network File System |
| NFV | Network Function Virtualisation |
| NFVI | Network Function Virtualisation Infrastructure |
| NIC | Network Interface Card |
| NPU | Numeric Processing Unit |
| NTP | Network Time Protocol |
| NUMA | Non-Uniform Memory Access |
| OAI | Open Air Interface |
| OS | Operating System |
| OSTK | OpenStack |
| OPNFV | Open Platform for NFV |
| OVS | Open vSwitch |
| OWASP | Open Web Application Security Project |
| PCIe | Peripheral Component Interconnect Express |
| PCI-PT | PCIe Passthrough |
| PXE | Preboot Execution Environment |
| QoS | Quality of Service |
| RA | Reference Architecture |
| RA-2 | Reference Architecture 2 (i.e., Reference Architecture for Kubernetes-based Cloud Infrastructure) |
| RBAC | Role-based Access Control |
| RBD | RADOS Block Device |
| REST | Representational State Transfer |
| RI | Reference Implementation |
| RM | Reference Model |
| SAST | Static Application Security Testing |
| SDN | Software Defined Networking |
| SFC | Service Function Chaining |
| SG | Security Group |
| SLA | Service Level Agreement |
| SMP | Symmetric Multiprocessing |
| SMT | Simultaneous Multithreading |
| SNAT | Source Network Address Translation |
| SNMP | Simple Network Management Protocol |
| SR-IOV | Single Root Input Output Virtualisation |
| SSD | Solid State Drive |
| SSL | Secure Sockets Layer |
| SUT | System Under Test |
| TCP | Transmission Control Protocol |
| TLS | Transport Layer Security |
| ToR | Top of Rack |
| TPM | Trusted Platform Module |
| UDP | User Datagram Protocol |
| VIM | Virtualised Infrastructure Manager |
| VLAN | Virtual LAN |
| VM | Virtual Machine |
| VNF | Virtualised Network Function |
| VRRP | Virtual Router Redundancy Protocol |
| VTEP | VXLAN Tunnel End Point |
| VXLAN | Virtual Extensible LAN |
| WAN | Wide Area Network |
| ZTA | Zero Trust Architecture |

1.5. References

Reference model for cloud infrastructure (rm). GSMA PRD NG.126 v3.0, 2022.

Kubernetes documentation. URL: https://kubernetes.io/docs/home/.

What is kubernetes. URL: https://kubernetes.io/docs/concepts/overview/.

Kubernetes github repository. URL: https://github.com/kubernetes/kubernetes.

Expanded cloud native principles. URL: https://networking.cloud-native-principles.org/cloud-native-principles.

Scott O. Bradner. Key words for use in rfcs to indicate requirement levels. RFC 2119, March 1997. URL: https://www.rfc-editor.org/info/rfc2119, doi:10.17487/RFC2119.

Cis password policy guide. URL: https://www.cisecurity.org/insights/white-papers/cis-password-policy-guide.

Cis controls list. URL: https://www.cisecurity.org/controls/cis-controls-list.

Cve - common vulnerabilities and exposures. URL: https://cve.mitre.org/.

Sbom - software bill of materials. URL: https://ntia.gov/page/software-bill-materials.

Cloud security alliance. URL: https://cloudsecurityalliance.org/.

Owasp cheat sheet series (ocss). URL: https://github.com/OWASP/CheatSheetSeries/.

Owasp top ten security risks. URL: https://owasp.org/www-project-top-ten/.

Owasp software maturity model (samm). URL: https://owaspsamm.org/.

Owasp web security testing guide. URL: https://github.com/OWASP/wstg/.

Iso/iec 27001. URL: https://www.iso.org/obp/ui/#iso:std:iso-iec:27001:ed-2:v1:en.

Iso/iec 27032. URL: https://www.iso.org/obp/ui/#iso:std:iso-iec:27032:ed-1:v1:en.

Cncf kubernetes conformance. URL: https://github.com/cncf/k8s-conformance.

Open container initiative (oci) runtime spec. URL: https://github.com/opencontainers/runtime-spec.

Kubernetes docs - pod. URL: https://kubernetes.io/docs/concepts/workloads/pods.

Kubernetes docs - replicaset. URL: https://kubernetes.io/docs/concepts/workloads/controllers/replicaset.

Kubernetes docs - deployment. URL: https://kubernetes.io/docs/concepts/workloads/controllers/deployment.

Kubernetes docs - daemonset. URL: https://kubernetes.io/docs/concepts/workloads/controllers/daemonset.

Kubernetes docs - job. URL: https://kubernetes.io/docs/concepts/workloads/controllers/job.

Kubernetes docs - cronjob. URL: https://kubernetes.io/docs/concepts/workloads/controllers/cron-jobs.

Kubernetes docs - statefulset. URL: https://kubernetes.io/docs/concepts/workloads/controllers/statefulset.

Kubernetes docs - cpu manager. URL: https://kubernetes.io/docs/tasks/administer-cluster/cpu-management-policies.

Kubernetes docs - huge pages. URL: https://kubernetes.io/docs/tasks/manage-hugepages/scheduling-hugepages.

Kubernetes docs - memory manager. URL: https://kubernetes.io/docs/tasks/administer-cluster/memory-manager.

Kubernetes docs - topology manager. URL: https://kubernetes.io/docs/tasks/administer-cluster/topology-manager.

Kubernetes node feature discovery. URL: https://kubernetes-sigs.github.io/node-feature-discovery/.

Kubernetes docs - device plugin framework. URL: https://kubernetes.io/docs/concepts/extend-kubernetes/compute-storage-net/device-plugins.

Kubernetes sr-iov network device plugin. URL: https://github.com/k8snetworkplumbingwg/sriov-network-device-plugin.

Github: multus-cni. URL: https://github.com/k8snetworkplumbingwg/multus-cni.

Cert-manager. URL: https://cert-manager.io/.

Kubernetes docs - network plugins. URL: https://kubernetes.io/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins.

Kubernetes network custom resource definition. URL: https://github.com/k8snetworkplumbingwg/multi-net-spec.

Tungsten fabric. URL: https://tungsten.io/.

Af_xdp device plugin. URL: https://github.com/intel/afxdp-plugins-for-kubernetes.

Cloud native data plane. URL: https://cndp.io/.

Dpdk af_xdp poll mode driver. URL: https://doc.dpdk.org/guides/nics/af_xdp.html.

Kubernetes docs - ingress. URL: https://kubernetes.io/docs/concepts/services-networking/ingress.

Kubernetes docs - service. URL: https://kubernetes.io/docs/concepts/services-networking/service.

Kubernetes docs - endpointslices. URL: https://kubernetes.io/docs/concepts/services-networking/endpoint-slices.

Kubernetes docs - network policies. URL: https://kubernetes.io/docs/concepts/services-networking/network-policies.

Container network interface. URL: https://github.com/containernetworking/cni.

Kubernetes network special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-network.

Kubernetes network plumbing working group. URL: https://github.com/k8snetworkplumbingwg/community.

Helm documentation. URL: https://helm.sh/docs.

Kubernetes docs - custom resources. URL: https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/custom-resources.

Kubernetes docs - custom resource definitions. URL: https://kubernetes.io/docs/tasks/extend-kubernetes/custom-resources/custom-resource-definitions.

Kubernetes docs - api server aggregation. URL: https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/apiserver-aggregation.

Kubernetes docs - custom controllers. URL: https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/custom-resources/#custom-controllers.

Kubernetes docs - operator pattern. URL: https://kubernetes.io/docs/concepts/extend-kubernetes/operator.

Operator hub. URL: https://operatorhub.io.

Reference implementation based on ra2 specifications (ri2). URL: https://cntt.readthedocs.io/projects/ri2/.

Reference architecture (ra1) for openstack based cloud infrastructure. URL: https://cntt.readthedocs.io/projects/ra1/.

Node feature discovery. URL: https://kubernetes-sigs.github.io/node-feature-discovery/stable/get-started/index.html.

Kubernetes distributions and platforms document. URL: https://docs.google.com/spreadsheets/d/1uF9BoDzzisHSQemXHIKegMhuythuq_GL3N1mlUUK2h0/.

Kubernetes supported versions. URL: https://kubernetes.io/releases/version-skew-policy/#supported-versions.

Kubernetes alpha api. URL: https://kubernetes.io/docs/reference/using-api/#api-versioning.

Kubernetes publishing services (servicetypes). URL: https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types.

Kubernetes ingress. URL: https://kubernetes.io/docs/concepts/services-networking/ingress/.

Open Container Initiative runtime specification, version 1.0.0. URL: https://github.com/opencontainers/runtime-spec/blob/v1.0.0/spec.md.

Kubernetes: introducing Container Runtime Interface (CRI) in Kubernetes. URL: https://kubernetes.io/blog/2016/12/container-runtime-interface-cri-in-kubernetes/.

Kubernetes network custom resource definition de-facto standard. URL: https://github.com/k8snetworkplumbingwg/multi-net-spec/tree/master/v1.2.

Kubernetes CSI drivers. URL: https://kubernetes-csi.github.io/docs/drivers.html.

Application Service Descriptor (ASD) and packaging proposals for CNF. URL: https://wiki.onap.org/display/DW/Application+Service+Descriptor+%28ASD%29+and+packaging+Proposals+for+CNF.

ETSI GS NFV-SOL 001: NFV descriptors based on TOSCA specification. ETSI GS NFV-SOL 001, 2022. URL: https://www.etsi.org/deliver/etsi_gs/NFV-SOL/001_099/001/04.02.01_60/gs_NFV-SOL001v040201p.pdf.

Kubernetes types of volumes, hostPath. URL: https://kubernetes.io/docs/concepts/storage/volumes/#hostpath.

CNF Test Suite, rationale, test if the Helm chart is published: helm_chart_published. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-helm-chart-is-published-helm_chart_published.

CNF Test Suite, rationale, test if the Helm chart is valid: helm_chart_valid. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-helm-chart-is-valid-helm_chart_valid.

CNF Test Suite, rationale, to test if the CNF can perform a rolling update: rolling_update. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-test-if-the-cnf-can-perform-a-rolling-update-rolling_update.

CNF Test Suite, rationale, to check if a CNF version can be downgraded through a rolling_downgrade: rolling_downgrade. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-a-cnf-version-can-be-downgraded-through-a-rolling_downgrade-rolling_downgrade.

CNF Test Suite, rationale, to check if the CNF is compatible with different CNIs: cni_compatibility. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-the-cnf-is-compatible-with-different-cnis-cni_compatibility.

CNF Test Suite, rationale, test if the CNF crashes when node drain occurs: node_drain. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-cnf-crashes-when-node-drain-occurs-node_drain.

CNF Test Suite, rationale, test if the CNF crashes when network latency occurs: pod_network_latency. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-cnf-crashes-when-network-latency-occurs-pod_network_latency.

CNF Test Suite, rationale, test if the CNF crashes when disk fill occurs: disk_fill. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-cnf-crashes-when-disk-fill-occurs.

CNF Test Suite, rationale, test if the CNF crashes when pod memory hog occurs: pod_memory_hog. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-cnf-crashes-when-pod-memory-hog-occurs-pod_memory_hog.

CNF Test Suite, rationale, test if the CNF crashes when pod IO stress occurs: pod_io_stress. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-cnf-crashes-when-pod-io-stress-occurs-pod_io_stress.

CNF Test Suite, rationale, test if the CNF crashes when pod network corruption occurs: pod_network_corruption. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-cnf-crashes-when-pod-network-corruption-occurs-pod_network_corruption.

CNF Test Suite, rationale, test if the CNF crashes when pod network duplication occurs: pod_network_duplication. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#test-if-the-cnf-crashes-when-pod-network-duplication-occurs-pod_network_duplication.

CNF Test Suite, rationale, to test if the CNF uses local storage: no_local_volume_configuration. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-test-if-the-cnf-uses-local-storage-no_local_volume_configuration.

CNF Test Suite, rationale, to test if there is a liveness entry in the Helm chart: liveness. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-test-if-there-is-a-liveness-entry-in-the-helm-chart-liveness.

CNF Test Suite, rationale, to test if there is a readiness entry in the Helm chart: readiness. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-test-if-there-is-a-readiness-entry-in-the-helm-chart-readiness.

CNF Test Suite, rationale, to check if there is automatic mapping of service accounts: service_account_mapping. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-there-is-automatic-mapping-of-service-accounts-service_account_mapping.

CNF Test Suite, rationale, to check if there is a host network attached to a pod: host_network. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-there-is-a-host-network-attached-to-a-pod-host_network.

CNF Test Suite, rationale, to check if containers are running with hostPID or hostIPC privileges: host_pid_ipc_privileges. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-containers-are-running-with-hostpid-or-hostipc-privileges-host_pid_ipc_privileges.

CNF Test Suite, rationale, to check if containers have resource limits defined: resource_policies. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-containers-have-resource-limits-defined-resource_policies.

CNF Test Suite, rationale, to check if containers have immutable file systems: immutable_file_systems. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-containers-have-immutable-file-systems-immutable_file_systems.

Kubernetes documentation: images. URL: https://kubernetes.io/docs/concepts/containers/images/.

CNF Test Suite, rationale, to test if there are any (non-declarative) hardcoded IP addresses or subnet masks in the K8s runtime configuration: hardcoded_ip_addresses_in_k8s_runtime_configuration. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-test-if-there-are-any-non-declarative-hardcoded-ip-addresses-or-subnet-masks-in-the-k8s-runtime-configuration-hardcoded_ip_addresses_in_k8s_runtime_configuration.

Kubernetes documentation: service. URL: https://kubernetes.io/docs/concepts/services-networking/service/.

Kubernetes documentation: immutable configmaps. URL: https://kubernetes.io/docs/concepts/configuration/configmap/#configmap-immutable.

Kubernetes documentation: horizontal pod autoscaling. URL: https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/.

CNF Test Suite, rationale, to check if the CNF has a reasonable image size: reasonable_image_size. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-the-cnf-has-a-reasonable-image-size-reasonable_image_size.

CNF Test Suite, rationale, to check if the CNF have a reasonable startup time: reasonable_startup_time. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-the-cnf-have-a-reasonable-startup-time-reasonable_startup_time.

CNF Test Suite, rationale, to check if there are any privileged containers: privileged_containers. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-there-are-any-privileged-containers-privileged_containers.

CNF Test Suite, rationale, to check if any containers are running as a root user (checks the user outside the container that is running dockerd): non_root_user. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-any-containers-are-running-as-a-root-user-checks-the-user-outside-the-container-that-is-running-dockerd-non_root_user.

CNF Test Suite, rationale, to check if any containers allow for privilege escalation: privilege_escalation. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-any-containers-allow-for-privilege-escalation-privilege_escalation.

CNF Test Suite, rationale, to check if containers are running with non-root user with non-root membership: non_root_containers. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-check-if-containers-are-running-with-non-root-user-with-non-root-membership-non_root_containers.

Kubernetes documentation: recommended labels. URL: https://kubernetes.io/docs/concepts/overview/working-with-objects/common-labels/.

The Twelve-Factor App: logs. URL: https://12factor.net/logs.

CNF Test Suite, rationale, to test if there are host ports used in the service configuration: hostport_not_used. URL: https://github.com/cnti-testcatalog/testsuite/blob/main/RATIONALE.md#to-test-if-there-are-host-ports-used-in-the-service-configuration-hostport_not_used.

Kubernetes documentation: LoadBalancer property of the Kubernetes Service. URL: https://kubernetes.io/docs/concepts/services-networking/service/#loadbalancer.

Service Mesh Interface (SMI). URL: https://smi-spec.io/.

Kubernetes documentation: ports and protocols. URL: https://kubernetes.io/docs/reference/networking/ports-and-protocols/.

Building secure microservices-based applications using service-mesh architecture. NIST Special Publication 800-204A, 2020. URL: https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-204a.pdf.

Attribute-based access control for microservices-based applications using a service mesh. NIST Special Publication 800-204B, 2020. URL: https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-204B.pdf.

Ars Technica: Tesla cloud resources are hacked to run cryptocurrency-mining malware. URL: https://arstechnica.com/information-technology/2018/02/tesla-cloud-resources-are-hacked-to-run-cryptocurrency-mining-malware/.

Kubernetes testing special interest group. URL: https://github.com/kubernetes/community/blob/master/sig-testing/charter.md.

Kubernetes end-to-end testing. URL: https://github.com/kubernetes/community/blob/master/contributors/devel/sig-testing/e2e-tests.md.

Kubernetes feature gates. URL: https://kubernetes.io/docs/reference/command-line-tools-reference/feature-gates/#feature-gates.

Kubernetes KEP-3136. URL: https://github.com/kubernetes/enhancements/blob/master/keps/sig-architecture/3136-beta-apis-off-by-default/README.md.

Kubernetes API. URL: https://kubernetes.io/docs/reference/using-api/.

Kubernetes API reference. URL: https://kubernetes.io/docs/reference/kubernetes-api/.

Kubernetes API groups. URL: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.29/.

Kubernetes API machinery special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-api-machinery.

Kubernetes feature CrossNamespacePodAffinity. URL: https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#namespace-selector.

Kubernetes feature StorageVersionAPI. URL: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.23/#storageversion-v1alpha1-internal-apiserver-k8s-io.

Kubernetes apps special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-apps.

Kubernetes feature DaemonSetUpdateSurge. URL: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.23/#rollingupdatedaemonset-v1-apps.

Kubernetes feature IndexedJob. URL: https://kubernetes.io/docs/concepts/workloads/controllers/job/.

Kubernetes feature StatefulSet. URL: https://kubernetes.io/docs/concepts/workloads/controllers/statefulset/.

Kubernetes feature SuspendJob. URL: https://kubernetes.io/docs/concepts/workloads/controllers/job/#suspending-a-job.

Kubernetes feature TaintEviction. URL: https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/#taint-based-evictions.

Kubernetes feature TTLAfterFinished. URL: https://kubernetes.io/docs/concepts/workloads/controllers/ttlafterfinished/.

Kubernetes auth special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-auth.

Kubernetes feature BoundServiceAccountTokenVolume. URL: https://github.com/kubernetes/enhancements/blob/master/keps/sig-auth/1205-bound-service-account-tokens/README.md.

Kubernetes cluster lifecycle special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-cluster-lifecycle.

Kubernetes instrumentation special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-instrumentation.

Kubernetes network special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-network.

Kubernetes feature IPv6DualStack. URL: https://kubernetes.io/docs/concepts/services-networking/dual-stack/.

Kubernetes extend Service IP ranges. URL: https://kubernetes.io/docs/tasks/network/extend-service-ip-ranges/.

Kubernetes node special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-node.

Kubernetes feature ProbeTerminationGracePeriod. URL: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/#probe-level-terminationgraceperiodseconds.

Kubernetes feature DownwardAPIHugePages. URL: https://kubernetes.io/docs/tasks/inject-data-application/downward-api-volume-expose-pod-information.

Kubernetes feature PodReadinessGate. URL: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#pod-readiness-gate.

Kubernetes feature Sysctls. URL: https://kubernetes.io/docs/tasks/administer-cluster/sysctl-cluster/.

Kubernetes scheduling special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-scheduling.

Kubernetes feature LocalStorageCapacityIsolation. URL: https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/.

Kubernetes storage special interest group. URL: https://github.com/kubernetes/community/tree/master/sig-storage.

Alibaba Cloud blog: what can we learn from Twitter's move to Kubernetes. URL: https://www.alibabacloud.com/blog/what-can-we-learn-from-twitters-move-to-kubernetes_595156.

YouTube: Kubernetes failure stories, or: how to crash your cluster - Henning Jacobs. URL: https://www.youtube.com/watch?v=LpFApeaGv7A.

CNCF blog: demystifying Kubernetes as a service – how Alibaba Cloud manages 10,000s of Kubernetes clusters. URL: https://www.cncf.io/blog/2019/12/12/demystifying-kubernetes-as-a-service-how-does-alibaba-cloud-manage-10000s-of-kubernetes-clusters/.

Google Docs: KEP: multinetwork PodNetwork object. URL: https://docs.google.com/document/d/17LhyXsEgjNQ0NWtvqvtgJwVqdJWreizsgAZHWflgP-A/edit.

Kubernetes docs: user namespaces. URL: https://kubernetes.io/docs/concepts/workloads/pods/user-namespaces/.

KEP-127: support user namespaces in stateless pods. URL: https://github.com/kubernetes/enhancements/tree/master/keps/sig-node/127-user-namespaces.

Wikipedia: Linux namespaces. URL: https://en.wikipedia.org/wiki/Linux_namespaces.

1.6. Conventions

The key words “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119 [6].